Research sheds light on future user experience

Car Design News talks to trained psychologist and UX designer Guido Meier-Arendt about a recent study on shaping a positive user experience

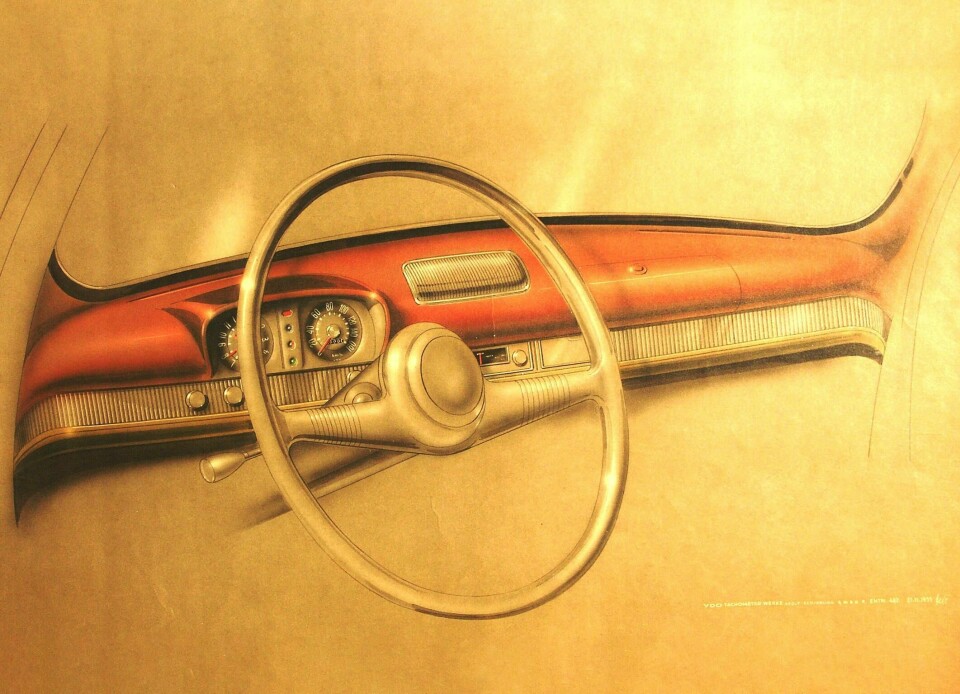

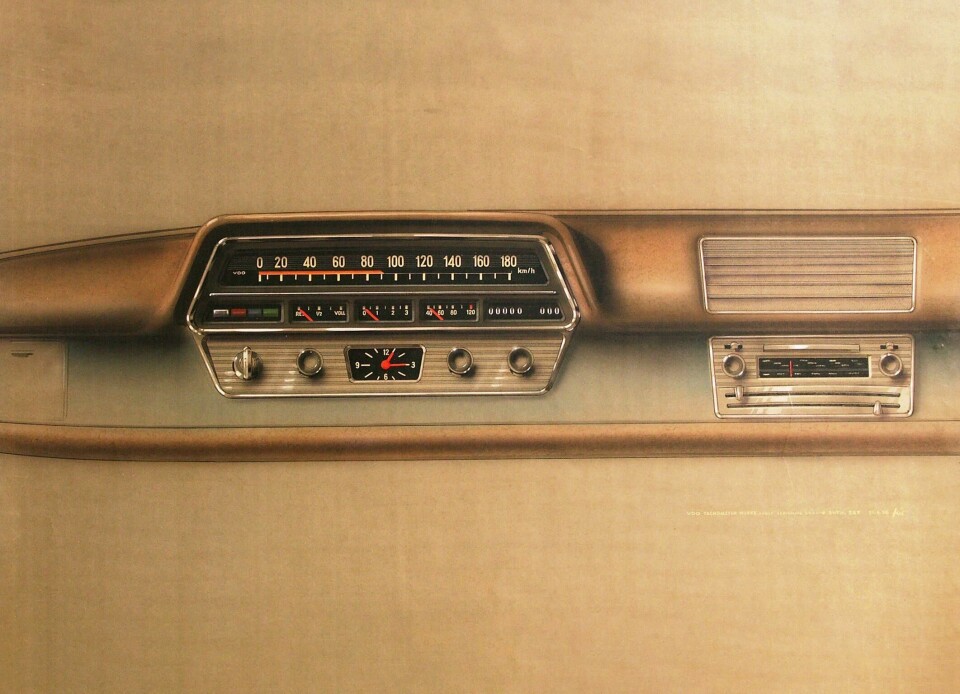

The task of operating a car is evolving from one in which the human is fully in control to more of a shared experience between man and machine.

An increasing number of tasks are being delegated to a computer. These include not only entertainment functions that can be handled by voice or gesture control but also active safety features that operate with growing autonomy. Indeed, the human-machine interface (HMI) is becoming an increasingly balanced partnership between driver and machine.

With a background in psychology, Guido Meier-Arendt, principal HMI expert at Continental, is well placed to investigate how HMI solutions should be put together. His team recently shared new research into the overall user experience (UX), which goes beyond the more granular HMI considerations to look at factors such as emotional response and consumer perception. (For example, a technology might be perfectly safe, but if it is awkward to use or looks unappealing, the consumer might never use it.)

“The motivation behind such research activities is to gain a deep understanding of the end user’s needs, and that enables us to develop innovative technologies that truly fit their requirements,” Meier-Arendt tells Car Design News. “We need to know that we are doing the right thing. Doing this kind of research means we can answer the question: what are the benefits in terms of safety, usability and the user experience?”

As a rule of thumb, features in the car should be intuitive to use and ergonomically well designed, but hedonic factors matter too: does it feel new, does it create a stimulating experience, is it joyful to use? Without the early involvement of the end user in studies like this, designers are not able to come up with solutions that genuinely fit the bill.

Studies like these also shed light on the grey areas of design and why things are used or ignored. As humans, we often can’t define exactly what we want, but we do know when something doesn’t feel right. “We have to find out what people really need, even if they may not be able to articulate this themselves,” Meier-Arendt says.

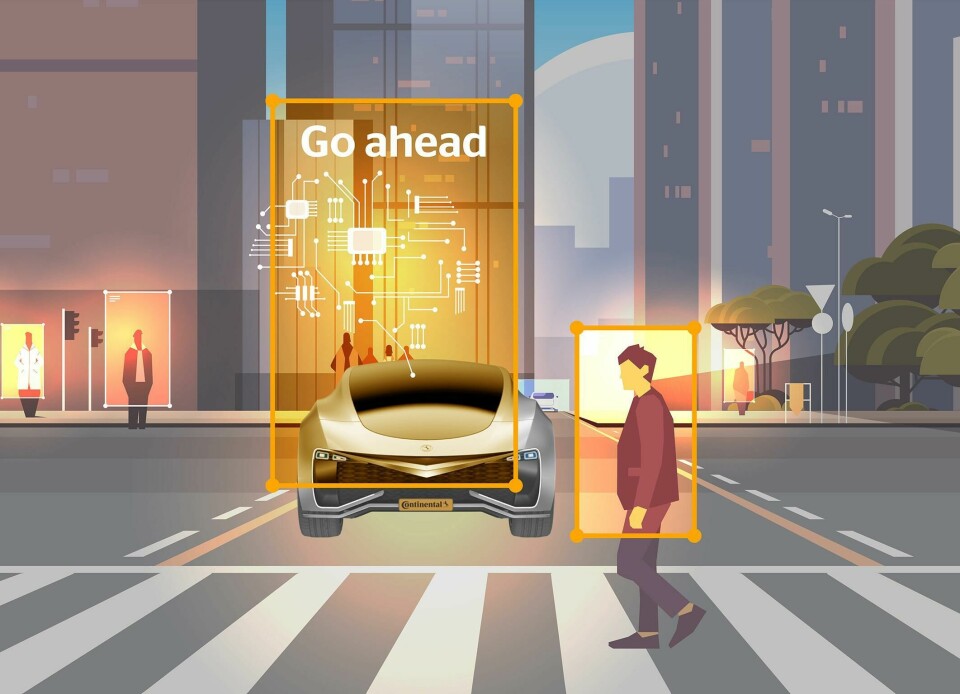

In general, he says, the transparency of driver assistance features must improve. Drivers cannot be left guessing whether a system is engaged or whether it has noticed something further down the road. “Today, we have the ability to clearly show what the vehicle is currently perceiving and what the planned manoeuvre is,” he notes, though this need not come in the form of a message on a screen.

“We need to inform with all channels – it is not sufficient to just switch from a white light to a blue one. That’s fine as part of a wider strategy, but it’s not sufficient in isolation,” explains Meier-Arendt. “You need auditory information and haptic feedback as well – you must apply all channels on board.”

This particular scenario is safety-critical, but not all interactions will be so urgent. The challenge will be managing how alerts and updates are delivered without harming the overall user experience, particularly if the car is new to the driver (which, by all accounts, will become more common with the advent of car sharing). “If it is the first time they’ve used it or have experienced a certain feature, we might choose to adjust the amount of information, perhaps to simplify the messaging, and adapt the output style or modality,” suggests Meier-Arendt.

The full study, titled Positive User Experience, goes into much greater detail.